- Published on

Extending RAG with Dynamic SQL Queries

In my previous post Extending RAG with database tools, I discussed how a Retrieval-Augmented Generation (RAG) system can be enhanced with plugins that handle specific queries using predefined functions. While this approach works well for a limited set of query types, it becomes difficult to scale as user queries become more diverse and complex.

In this post, I’ll explore how we can use a plugin to generate dynamic SQL queries from natural language, enabling the AI assistant to answer a much broader range of questions.

Why do we need dynamic query generation?

Let’s revisit the bike store example - We relied on a set of functions like get_bike_price, get_bikes_by_type, and get_top_selling_bikes to fetch data.

But what if a user asks:

[user]: Show me the list of top selling bikes, sorted by price descending,

and only those available in stock.

It’s not practical to create a dedicated function for every possible query. Instead, we need a way for the AI to translate natural language requests into SQL queries on the fly.

Using a Natural Language to SQL Plugin

To solve this, we can use a plugin that leverages an LLM to generate SQL queries dynamically. Here’s how the system prompt might look:

You are an expert in SQL. Given a database schema and a natural language question, generate a valid SQL query that answers the question using only the provided schema.

- Output only the SQL query as a string, with no explanations or extra text.

- If the question cannot be answered with the given schema, return: `SELECT NULL;`

- Ensure the query is syntactically correct and relevant to the question.

Schema and question will be provided below.

Schema:

CREATE TABLE Products (

id INT PRIMARY KEY,

model_name VARCHAR(255),

description VARCHAR,

bike_type VARCHAR(50),

price DECIMAL(10, 2),

created_at DATETIME

);

Question: {question}

SQL Query:";

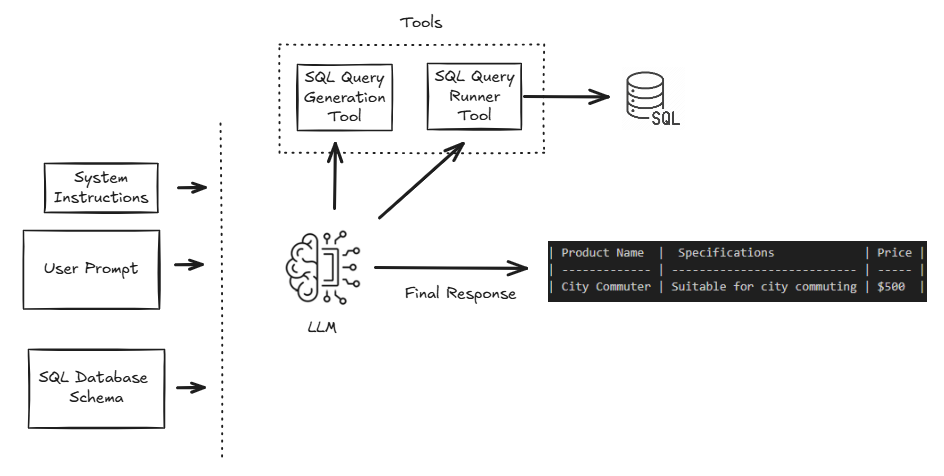

How does the LLM generate and use SQL?

Here’s how the flow works:

Step 1: The user asks a question in natural language.

Step 2: The LLM generates a plan to use the SQL plugin for generating the SQL query.

Step 3: The LLM generates the SQL query based on the schema and user request.

Step 4: The application executes the SQL against the database, fetches the results, and presents them to the user in a readable format. Here

See it in action:

You can find a .NET notebook on my GitHub respository 02-semantic-kernel-dynamic-sql-query that demonstrates natural language to SQL query generation using Semantic Kernel and Azure OpenAI.

Adding Security and Validation

To ensure the generated SQL is safe and valid, we can implement several strategies:

- Sandboxing: Execute the SQL in a controlled environment to limit its impact on the database.

- Limited Schema Access: Restrict the LLM’s access to only the necessary tables and columns in the database schema.

- Validation: Validate the generated SQL against a set of rules or patterns to ensure it adheres to expected formats.

- Logging and Monitoring: Log all generated SQL queries and monitor for unusual patterns or potential abuse.

Conclusion

By enabling dynamic SQL generation, we can make RAG systems much more flexible and powerful. The AI assistant can now answer a wide range of questions, limited only by the database schema and the LLM’s ability to generate correct SQL. This approach reduces the need for manual function creation and makes it easier to support new types of queries as user needs evolve.

However, this method also introduces new challenges, such as ensuring the generated SQL is safe (avoiding SQL injection) and accurate. Careful validation and sandboxing are essential when executing dynamically generated queries.